-

1 amount of mutual information in random variables (количество взаимной информации в случайных величинах)

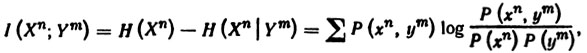

A measure of the reduction in the uncertainty of one random variable that results from learning the value of another random variable, averaged over the values of the latter; for discrete random variables it is given by

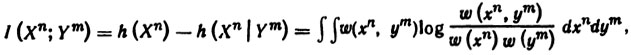

where the summation runs over the entire set of values $x_n$, $y_m$ of the random variables $X_n$, $Y_m$; for continuous random variables it is given by

where the integration runs over the entire set of values $x_n$, $y_m$ of the random variables $X_n$, $Y_m$.
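For the discrete case, the definition can be checked numerically. The sketch below assumes the standard textbook form $I(X;Y) = \sum P(x,y)\,\log_2 \frac{P(x,y)}{P(x)\,P(y)}$ (this notation is an assumption, since the entry gives its formula as a figure) and compares it with the uncertainty reduction $H(X) - H(X \mid Y)$, where the conditional entropy is averaged over the values of $Y$. The function names and example tables are illustrative only.

```python
import math


def marginals(joint):
    """Return (P(x), P(y)) for a joint table with joint[i][j] = P(X=x_i, Y=y_j)."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return px, py


def mutual_information(joint):
    """Mutual information in bits, computed directly from the double sum."""
    px, py = marginals(joint)
    total = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0.0:  # terms with P(x, y) = 0 contribute nothing
                total += pxy * math.log2(pxy / (px[i] * py[j]))
    return total


def entropy(probs):
    """Shannon entropy in bits of a probability vector."""
    return -sum(p * math.log2(p) for p in probs if p > 0.0)


def uncertainty_reduction(joint):
    """H(X) - H(X|Y), with H(X|Y) averaged over the values of Y."""
    px, py = marginals(joint)
    h_x_given_y = 0.0
    for j, pyj in enumerate(py):
        if pyj > 0.0:
            conditional = [row[j] / pyj for row in joint]  # P(x | y_j)
            h_x_given_y += pyj * entropy(conditional)
    return entropy(px) - h_x_given_y


# Hypothetical example tables (rows index x, columns index y).
independent = [[0.25, 0.25], [0.25, 0.25]]
identical = [[0.5, 0.0], [0.0, 0.5]]
noisy = [[0.4, 0.1], [0.1, 0.4]]

if __name__ == "__main__":
    for name, table in [("independent", independent),
                        ("identical", identical),
                        ("noisy", noisy)]:
        print(name, mutual_information(table), uncertainty_reduction(table))
```

For independent variables the result is 0 bits, and for two identical fair binary variables it is 1 bit. On every table the two functions should agree up to floating-point rounding, which is the sense in which mutual information measures the average reduction in uncertainty.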

Notes

1. Instead of the term "amount of mutual information in random variables", the expression "amount of information about a random variable contained in another random variable" is sometimes used.

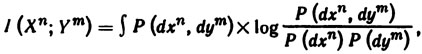

2. The general mathematical expression for the amount of mutual information, valid in the arbitrary case, has the form

where $P(x_n, y_m)$, $P(x_n)$ and $P(y_m)$ are probability measures defined on the sets of values $\{(x_n, y_m)\}$, $\{x_n\}$ and $\{y_m\}$ of the random variables $(X_n, Y_m)$, $X_n$ and $Y_m$, respectively.
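For orientation, one common measure-theoretic way to write such a general form is sketched below. The symbol $I(X_n; Y_m)$, the Radon-Nikodym notation, and the density symbol $p$ are assumptions and may differ from the notation of the cited collection. The expression is defined when the joint measure is absolutely continuous with respect to the product of the marginal measures; otherwise the mutual information is usually taken to be infinite.

```latex
% General form: integral of the log Radon-Nikodym derivative of the joint
% measure with respect to the product of the marginal measures.
I(X_n; Y_m) = \int \log \frac{dP(x_n, y_m)}{d\bigl(P(x_n) \times P(y_m)\bigr)} \, dP(x_n, y_m)

% Discrete case: the measures are probability mass functions.
I(X_n; Y_m) = \sum_{x_n,\, y_m} P(x_n, y_m) \log \frac{P(x_n, y_m)}{P(x_n)\, P(y_m)}

% Continuous case: the measures have densities p (assumed notation).
I(X_n; Y_m) = \iint p(x_n, y_m) \log \frac{p(x_n, y_m)}{p(x_n)\, p(y_m)} \, dx_n \, dy_m
```

The second and third lines follow from the first by substituting probability mass functions or densities for the Radon-Nikodym derivative.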

[Сборник рекомендуемых терминов. Выпуск 94. Теория передачи информации (Collection of Recommended Terms, Issue 94: Information Transmission Theory). USSR Academy of Sciences, Committee on Technical Terminology, 1979.]

Russian-English Dictionary of Normative and Technical Terminology (Русско-английский словарь нормативно-технической терминологии)

-

2 amount of mutual information in random variables (количество взаимной информации в случайных величинах)

General subject: mutual information between two random variables (a measure of the reduction in the uncertainty of one random variable that results from learning the value of another random variable, averaged…)

Universal Russian-English Dictionary (Универсальный русско-английский словарь)

-

3 amount of information about a random variable contained in another random variable (количество информации о случайной величине, содержащееся в другой случайной величине)

General subject: mutual information between two random variables (a measure of the reduction in the uncertainty of one random variable that results from learning the value…)

Universal Russian-English Dictionary (Универсальный русско-английский словарь)

See also in other dictionaries:

Mutual information — Individual (H(X),H(Y)), joint (H(X,Y)), and conditional entropies for a pair of correlated subsystems X,Y with mutual information I(X; Y). In probability theory and information theory, the mutual information (sometimes known by the archaic term… … Wikipedia

Multivariate mutual information — In information theory there have been various attempts over the years to extend the definition of mutual information to more than two random variables. These attempts have met with a great deal of confusion and a realization that interactions… … Wikipedia

Information theory — Not to be confused with Information science. Information theory is a branch of applied mathematics and electrical engineering involving the quantification of information. Information theory was developed by Claude E. Shannon to find fundamental… … Wikipedia

Information theory and measure theory — Many of the formulas in information theory have separate versions for continuous and discrete cases, i.e. integrals for the continuous case and sums for the discrete case. These versions can often be generalized… … Wikipedia

Two-factor authentication — (TFA, T-FA or 2FA) is an approach to authentication which requires the presentation of two different kinds of evidence that someone is who they say they are. It is a part of the broader family of multi-factor authentication, which is a defense in … Wikipedia

Information bottleneck method — The information bottleneck method is a technique introduced by Tishby et al. [1] for finding the best tradeoff between accuracy and complexity (compression) when summarizing (e.g. clustering) a random variable X, given a joint probability… … Wikipedia

Entropy (information theory) — In information theory, entropy is a measure of the uncertainty associated with a random variable. The term by itself in this context usually refers to the Shannon entropy, which quantifies, in the sense of an expected value, the information… … Wikipedia

amount of mutual information in random variables (количество взаимной информации в случайных величинах) — A measure of the reduction in the uncertainty of one random variable that results from learning the value of another random variable, averaged over the values of the latter; for discrete random variables it is given by where… … Technical Translator's Handbook (Справочник технического переводчика)

Quantities of information — A simple information diagram illustrating the relationships among some of Shannon's basic quantities of information. The mathematical theory of information is based on probability theory and statistics, and measures information with several… … Wikipedia

Models of collaborative tagging — Many have argued that social tagging or collaborative tagging systems can provide navigational cues or “way finders” [1][2] for other users to explore information. The notion is that, given that social tags are labels that users create to… … Wikipedia

Correlation and dependence — This article is about correlation and dependence in statistical data. For other uses, see correlation (disambiguation). In statistics, dependence refers to any statistical relationship between two random variables or two sets of data. Correlation … Wikipedia